Intel AI Exec Gadi Singer: Partners 'Crucial' To Nervana NNP-I's Success

'As we are working with [cloud service providers], we are also bringing it to a point where it will be available for larger scale, and this is where our partners are crucial for success,' Intel's Gadi Singer says of the chipmaker's new chip for deep learning inference workloads.

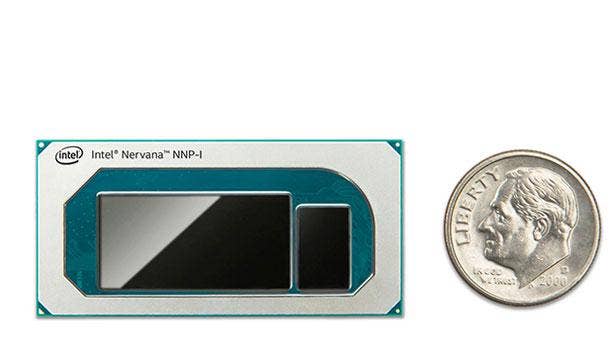

New Chip Has Higher Compute Density Than Nvidia's T4 GPU

Intel artificial intelligence executive Gadi Singer said the chipmaker's channel partners will play a "crucial" role in the success of the new Nervana NNP-I chips for deep learning inference workloads.

"The first wave of engagements that we have are with some of the large cloud service providers, because they are very advanced users of that and they use it by scale," Singer, a 36-year company veteran, told CRN in an interview. "But as we are working with them, we are also bringing it to a point where [...] it will be available for larger scale, and this is where our partners are crucial for success."

As vice president of Intel's Artificial Intelligence Products Group and the newly formed Inference Products Group, Singer has played a central role in development of the NNP-I1000, which was revealed alongside the Nervana NNP-T1000 chip for deep learning training at the Intel AI Summit on Tuesday.

Inference is a critical part of deep learning applications, taking neural networks that have been trained on large data sets and bringing them into the real world for on-the-fly decision making. This has created an opening for Intel and other companies to create specialized deep learning chips — a market that is forecast to reach $66.3 billion in value by 2025, according to research firm Tractica.

From a competitive standpoint, the chipmaker said a 1U server rack containing 32 of its NNP-I chips provides nearly four times the compute density of a 4U rack with 20 Nvidia T4 inference GPUs. In a live demo at the summit, the NNP-I rack was processing 74,432 images per second per rack unit while the Nvidia T4 rack was processing 20,255 images per second per rack unit.
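
The density comparison can be checked with simple arithmetic. The short Python sketch below uses only the two per-rack-unit figures quoted from the demo. The constant and function names are illustrative, and the whole-system totals assume that the per-rack-unit number multiplied by the rack height gives the system throughput, which the demo figures imply but Intel did not state directly.

```python
# Figures quoted from the live demo, in images per second per rack unit (U).
NNP_I_IMAGES_PER_SEC_PER_U = 74_432
T4_IMAGES_PER_SEC_PER_U = 20_255

# Rack heights described in the comparison.
NNP_I_RACK_UNITS = 1
T4_RACK_UNITS = 4


def density_ratio(a_per_u: float, b_per_u: float) -> float:
    """Return how many times denser system A is than system B per rack unit."""
    return a_per_u / b_per_u


ratio = density_ratio(NNP_I_IMAGES_PER_SEC_PER_U, T4_IMAGES_PER_SEC_PER_U)

# Assumption: total throughput = per-rack-unit throughput * rack height.
nnp_i_total = NNP_I_IMAGES_PER_SEC_PER_U * NNP_I_RACK_UNITS
t4_total = T4_IMAGES_PER_SEC_PER_U * T4_RACK_UNITS

print(f"Density ratio: {ratio:.2f}x")
print(f"Implied NNP-I system total: {nnp_i_total} images/sec in {NNP_I_RACK_UNITS}U")
print(f"Implied T4 system total: {t4_total} images/sec in {T4_RACK_UNITS}U")
```

The ratio works out to about 3.67, which is consistent with the "nearly four times" claim.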

"The fact that it's high power efficiency allows us to reach density and very good total cost of ownership," Singer said.

What follows is an edited transcript of CRN's interview with Singer, who talked about the NNP-I's target use cases, how Intel plans to accelerate adoption, what pain points it plans to address for businesses running deep learning workloads and how it differs from Xeon's deep learning capabilities.

What would your elevator pitch for the NNP-I be?

It's leadership, performance and power efficiency for scale and real-life usages. And if I unpack it a little bit, we did build it for power efficiency, because if you look at the NNP-I, it is small in scale, and it ranges from 10 watts to 50 watts. So it can come either in a very small [form] factor, like the one that I'm holding, which is the M.2 form factor, all the way to larger systems with two on a PCIe card.

So the fact that it's high power efficiency allows us to reach density and very good total cost of ownership. The other point about scale and real-life [usages] is the diversity of usages. When we designed it, we made sure that we're looking at different types of topologies. We're looking at vision-oriented topologies that are image recognition or other analysis of images. We were looking at language-based topologies. So there's a whole field of natural language processing, or NLP, machine translation from language to language, speech. And another space is recommendation systems.

Recommendation systems are very different from the other two. And when we build it, we build it for a variety of usages, both so that we can address multiple usages but also because the nature of the problem is changing so rapidly that by the time you have a solution, it's for a different problem. So we built it for future problems as they come up.

Another aspect that is important for real-life usages is the versatility for heterogeneous workloads. There are very few workloads or usages that are purely deep learning. Usually there is a business workload, which does whatever, medical imaging, transportation, industrial, whatever the usage is. And then you want to enrich it with deep learning. So when you look at their real-life application, unlike a proxy benchmark, you have very heterogeneous code. You have portions that are deep learning, portions that are decisions, some other general purpose.

So we built it as a set of capabilities on-die, including things that are very efficient for tensor operations, which are multi-dimensional arrays, like for vision. We have vector processing on that. We have general purpose. We have two IA [Intel architecture] cores of that. And we have lots of memory, with 75 MB of memory. And the importance of memory is for power efficiency.

Moving data is more expensive than calculating it. [That] might sound counterintuitive, but, actually, because silicon is so efficient in doing multiplication and addition and others, it is almost 10 [times] more expensive to bring data to the right place than to compute it. So we spent a lot of architectural and design thought about how to bring data once, keep data and manipulate it, compute it with as little movement as possible, and this is why we have so much of the memory on die.
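
Singer's point about data movement can be illustrated with a toy cost model. The Python sketch below is not Intel's methodology. It takes the rough 10-to-1 ratio from his answer, treats cost as an arbitrary relative unit, and uses a hypothetical workload size and reuse factor to show why keeping data on die reduces total cost.

```python
# Relative costs in arbitrary units. The 10:1 ratio is the rough figure
# mentioned in the interview; everything else here is hypothetical.
COMPUTE_COST = 1.0
MOVE_COST = 10.0


def total_cost(num_ops: int, num_fetches: int) -> float:
    """Total relative cost of a workload: compute plus data movement."""
    return num_ops * COMPUTE_COST + num_fetches * MOVE_COST


ops = 1_000          # hypothetical number of arithmetic operations
reuse_factor = 100   # hypothetical: each fetched value is reused 100 times

# Case 1: every operation fetches its data from far away.
no_reuse = total_cost(ops, num_fetches=ops)

# Case 2: data is fetched once, kept close, and reused.
with_reuse = total_cost(ops, num_fetches=ops // reuse_factor)

print(f"No reuse:   {no_reuse:.0f} units")
print(f"With reuse: {with_reuse:.0f} units")
print(f"Savings:    {no_reuse / with_reuse:.1f}x")
```

Under these assumptions the compute cost is identical in both cases, and the entire difference comes from how often data has to be moved.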

Why is the increased compute density of NNP-I so important?

Density is important in several respects. One is ability to fit it in [a] space, so if you have an infrastructure, and you want to expand and create significantly additional inference compute capability, it allows you to expand more easily, because it requires less space.

It also allows you within the same rack to create better combinations, because you have high density inference that you can add to the other aspect of compute that you want to put within the rack. And the other aspect of density is that you can use it in other places, not only in the 1U in the rack, but you can use it in appliances, you can use it in other places where density is really important.

What are the form factors for the NNP-I?

Today we're showing three form factors. We have the M.2, which can go into a socket that you're using for SSDs or for other accelerators, which is used in data centers but also in other places as well. We have a PCIe card. And then you have this 1U ["ruler" form factor]. But we are working on other form factors. Because of the small dimensions and the power efficiency, it can fit in many other form factors as well.

Could you technically fit it into a small edge computer?

You could, but our primary objective is to look at aggregation points and the data center. So if you would like to put some inference capability on a security camera, on a drone, you would use a [Movidius] VPU. So when you look at the whole range from a couple of watts all the way to tens of watts or higher, and you look at the size of [AI] models, we have the VPU and the NNP-I to cover the whole range.

Intel has the pod reference architecture for NNP-T. What is Intel doing for the NNP-I in terms of providing reference architecture or helping accelerate designs with NNP-I? When I think of what Nvidia is doing, they have EGX, for example, for edge servers.

The three form factors that we talked about and the other ones that we'll talk about in another day are providing solutions. Some of those are reference solutions, some of those [will come from] work with ODMs to create solutions that can be used. So this is something that will be available in terms of systems in various form factors.

The other important thing that we're doing is software, because when you're looking at integrating into systems, a major factor is software. So we're working on software that takes advantage of the popular deep learning frameworks, so TensorFlow and others will have a path into an API, so that [as] you're developing your solution, you can run it on Xeon and then you can decide over time to migrate it, or maybe part of it, the parts you want to accelerate, to NNP-I. So software development is a very important aspect of creating a platform for users.

You were talking about the differences between Movidius and NNP-I for inference, but Intel also has the Xeon Scalable processors that come with Deep Learning Boost for inference acceleration. So how do you help customers understand the differences between each product's inference capabilities?

There are cases where Xeon is absolutely the best solution to use. When you start your journey, Xeon is the best environment. Everybody's familiar with that. They have the environment. So when you start, you start with Xeon. There are many customers where the usage of deep learning is so interspersed with the other non-deep learning [workloads] that they have a mix. And when you have a mix, Xeon is a great solution, because DL Boost accelerates your deep learning portion, and it is the best general-purpose solution. So when you have a high mix of those, it is best.

When you have very large models, there are some applications where the size requires the full memory hierarchy of Xeon. That's also a great use for Xeon. So there are lots of usages where Xeon is, whether on the [first] step of the journey or for your final use, the best environment.

There are cases where you would like to deploy an accelerator, where you are using an extensive 24/7 acceleration and you want to tune it for acceleration. You would like to use a system that integrates the acceleration as part of the overall solution together with Xeon. So we make sure that we build an uncompromised acceleration for that part, and we provide the software to allow you to run it together.

What would you consider some of the pain points that businesses were facing that led to how the NNP-I was designed?

I think that the primary challenge for businesses is adopting deep learning in a fast manner in a way that enhances their application. And only now there's enough understanding to see how we can integrate it [into] almost any application. There's a blog that I can point you to that's called the Four Superpowers of Deep Learning, which talks about how you can take deep learning almost to any technology, to either identify patterns where you don't have any model or accelerate compute when you do have models or generate new content based on examples or translate from one sequence to another.

So the biggest challenge is for organizations to integrate data scientists, integrate people who are deeply familiar with a new technology, together with all the traditional strength and knowledge in their domain expertise, and finding how, between those two technologies, they can enhance whatever they're doing in a way that takes full advantage of that.

There are other challenges. How do you create enough data? And how do you clean the data and tag the data, so you can use new data to do it? So those challenges are what sets the pace for businesses from all industries to take advantage of them. Once they do it, we will provide them — we already are providing them with a path to accelerate it. And based on the sophistication, it could be in automatic mapping, so that they can just use it from the framework of choice onto the acceleration. Or it could allow them tools to go down if you have the expertise and fine-tune it to an even higher utilization.

How important are Intel's channel partners to selling the NNP-I as a solution?

Very important — in a bit. And the reason is because the first wave of engagements that we have are with some of the large cloud service providers, because they are very advanced users of that and they use it by scale. But as we are working with them, we are also bringing it to a point where [...] it will be available for larger scale, and this is where our partners are crucial for success.

I noticed that you had a change in title earlier this year. In addition to being vice president of the Artificial Intelligence Products Group, you are now also general manager of the Inference Products Group. Is that a new group that was formed within Intel?

Yes, so until about half [a] year ago, I was the GM of the architecture group within the AI Products Group, and I was driving the various architectures for AI. As we get closer to product, we reorganized it to make sure that we have focused on those products going to market, meeting all customer needs, getting deployed. So we created the Inference Products Group, and I'm leading it as GM.

How many people work in that group?

We're not talking about particular numbers, but what I would say is that I have as many [or] more people working [on] software as I do in hardware. So we invest, from experience, on how many people it takes to do designs like that, and our focus on top of it is creating a software stack that allows mapping any type of topology onto the hardware.