The 10 Hottest New Big Data Technologies Of 2019

Products that offer new ways to capture, manage, navigate and analyze data were among the hot technologies in the big data arena this year.

Information Inundation

Businesses and organizations today need a broad range of sophisticated tools and technologies to manage – and derive value from – big data. That includes systems to capture, transform, manage and analyze exponentially growing volumes of data – including data generated by new sources such as Internet of Things networks.

Spending on big data and business analytics products is expected to reach $210 billion in 2020, according to market researcher IDC, up from $150.8 billion in 2017.

Here’s a look at 10 breakthrough technologies that caught our attention in 2019.


AWS Lake Formation

Data lakes are huge stores of unorganized data, often of different types and formats. By breaking down data silos and giving data a single central home, data lakes make it easier for analysts and information workers to perform analytics and machine learning across all their data.

In August, AWS launched AWS Lake Formation, a fully managed service for building, securing and managing data lakes. The service automates many of the complex manual steps required to assemble a data lake, including provisioning and configuring data storage; collecting, cleaning and cataloging data; and securely making the data available for analytics.

Data in AWS Lake Formation can be analyzed using other AWS services, including Redshift, Athena and Glue.

Cloudera Data Platform

Cloudera merged with rival big data software vendor Hortonworks at the start of 2019 and began the complex process of combining the two companies’ technology portfolios.

In September, Cloudera debuted the Cloudera Data Platform, an integrated platform for data management and governance that delivers self-service analytics and artificial intelligence applications across hybrid and multi-cloud environments. Cloudera Data Platform replaces both Cloudera’s own pre-merger software and the Hortonworks Data Platform, incorporating technology from both systems.

Cloudera also unveiled new applications that run on the Cloudera Data Platform: Cloudera Data Warehouse, Cloudera Machine Learning and Cloudera Data Hub. Other applications for operational databases, streaming data and data engineering are in the works.

Confluent ksqlDB

Businesses and organizations are increasingly working with streaming, real-time data. Confluent, which markets an event streaming platform for organizing and managing real-time data, was founded by the developers of the Apache Kafka stream processing technology upon which the Confluent system is built.

In November, Confluent launched ksqlDB, an event streaming database purpose-built to help developers create applications that take advantage of Kafka’s stream processing capabilities. According to Confluent, the database simplifies the application architecture needed to build real-time event streaming applications. ksqlDB speeds application development with pull queries, which look up the current state of a materialized view much like a query against a traditional database. It also uses embedded connectors, which run Kafka Connect connectors inside ksqlDB to move data into and out of external systems.

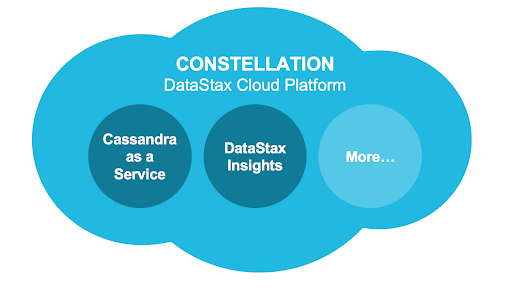

DataStax Constellation

DataStax develops a distributed cloud database built on the open-source Apache Cassandra database. In May, at its inaugural DataStax Accelerate conference, the company debuted the DataStax Constellation cloud platform, with services for developing and deploying cloud applications architected for Apache Cassandra.

The platform initially offers two services: DataStax Apache Cassandra-as-a-Service and DataStax Insights. Insights is a performance management and monitoring tool for DataStax Constellation and for DataStax Enterprise, the vendor’s flagship database product.

Domo IoT Cloud

Fast-growing business intelligence vendor Domo launched Domo IoT Cloud in March, offering a service that uses the popular Domo cloud platform to help users capture, visualize and analyze real-time data generated by connected Internet of Things devices.

Domo IoT Cloud includes a set of pre-built connectors that link Domo to data sources such as AWS IoT Analytics, Azure IoT Hub and Apache Kafka. It also includes the first two of an expected series of IoT-focused applications. The Domo Device Fleet Management App is for organizing fleets of devices, and the Production Flow App is for monitoring the production process of shop-floor devices.

Immuta Automated Data Governance Platform

Data governance, which means ensuring that data is used in line with security, legal and compliance policies, is a major challenge for IT organizations. Immuta’s Automated Data Governance Platform provides no-code, automated data governance capabilities. These let business analysts and data scientists securely collaborate using shared data, dashboards and scripts without fear of violating data usage policies and industry regulations.

The Immuta system is designed to help organizations comply with the European Union’s General Data Protection Regulation (GDPR), the California Consumer Privacy Act (CCPA) and the Health Insurance Portability and Accountability Act (HIPAA).

Immuta also delivers automated data governance as a managed service via the AWS Marketplace.

Promethium Data Navigation System

Promethium is addressing the challenges of self-service data discovery and self-service analytics with its Data Navigation System (DNS), a data context platform that launched in October. DNS uses machine learning algorithms and natural language processing to give users the data they need to answer a question in just minutes. It locates the relevant data, shows how that data needs to be assembled, automatically generates the SQL query to retrieve it and then executes the query.

The system integrates with existing databases, data warehouses, data lakes and cloud-based data sources as well as data management and business intelligence systems. DNS is provided on a Software-as-a-Service basis through a public cloud or a customer’s virtual private cloud, or through on-premises deployment.

Rockset

Rockset has developed serverless search and analytics technology for performing operational analytics in the cloud. According to the company, traditional transactional databases and data warehouses can’t handle this kind of workload.

The Rockset system enables real-time SQL queries on terabytes of NoSQL data, providing a fast and simple way to ingest and query data to serve real-time APIs and live dashboards. The software works with data from a variety of sources, including Amazon S3, Amazon DynamoDB, Amazon Kinesis and Amazon Redshift; Apache Kafka; and Google Cloud Storage.

Splunk Data Fabric Search and Splunk Data Stream Processor

Splunk introduced the 8.0 edition of its Splunk Enterprise software earlier this year with two major new capabilities: Data Fabric Search and Data Stream Processor. Together they extend the platform to larger, more distributed and faster-moving data.

Splunk Data Fabric Search speeds up complicated queries on massive data sets, even when the data is spread across multiple data sources. The technology leverages the distributed processing power of external compute engines such as Apache Spark to broaden the scope and capability of Splunk Enterprise.

Splunk Data Stream Processor continuously collects high-velocity, high-volume data from diverse sources and distributes insights to multiple destinations in real time. The software can perform complex transformations of the data during the processing stage before the data is indexed.

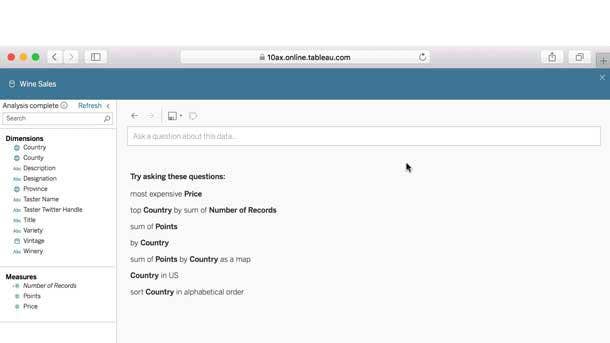

Tableau Ask Data

Tableau made a big jump into the realm of natural language processing (NLP) this year when it added Ask Data to its Tableau Server and Tableau Online analytical software. Ask Data makes it possible for everyday information workers to query data in a conversational manner, a capability that allows more people to engage with the business analytics platform and develop analytical insights without the need to learn data dimensions, structures or measures.

Ask Data is based on NLP technology Tableau acquired when it bought ClearGraph in August 2017.