The 10 Coolest New Big Data Tools Of 2020

With data volumes growing at a rapid clip, accessing, managing and analyzing big data has become a monumental task. Here are 10 big data tools that debuted in 2020 that can help.

Big Data, Big Impact

The volume of data being collected by businesses and organizations is growing exponentially, and that data is increasingly scattered across on-premises, cloud, hybrid-cloud and multi-cloud systems. The result: Managing, accessing and utilizing all that data is an increasingly complex task.

IT vendors, both startups and established players, are developing the next generation of platforms and tools for managing big data and making it accessible to analysts and information workers.

Here are 10 big data platform and tool offerings that caught our attention in 2020.

Some of the featured products are new cloud Database-as-a-Service platforms and related software. That’s no surprise: In a July 1 report, market researcher Gartner said that by 2022, 75 percent of all databases will be deployed or migrated to a cloud platform, largely those used for business analytics and for Software-as-a-Service applications.

Alluxio Data Orchestration Platform

Data management and storage systems, and the applications and analytical tools that need access to them, are increasingly dispersed across on-premises, hybrid-cloud and multi-cloud systems.

The Alluxio Data Orchestration Platform is designed to link data-driven applications, such as operational applications, AI tools and business analytics software, with on-premises and cloud databases, Hadoop-based data lakes, Amazon S3 and Google Cloud Storage, and more – no matter where they reside.

Alluxio founder and CEO Haoyuan Li developed the company’s core technology in 2014 as part of Project Tachyon at U.C. Berkeley.

In October Alluxio debuted release 2.4 of its software with new tools and expanded functionality for connecting data sources, including an expanded metadata service, the new Alluxio Data Orchestration Hub management console for hybrid and multi-cloud systems, and support for additional cloud-native deployments.

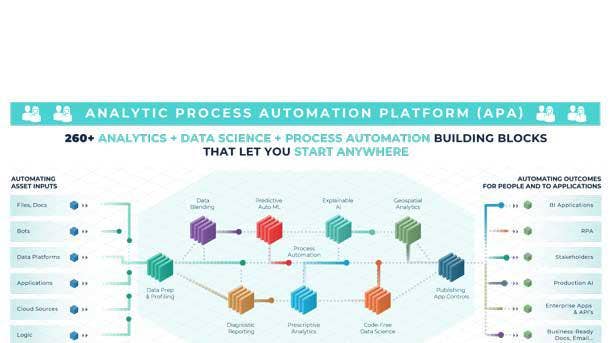

Alteryx Analytic Process Automation Platform, Analytics Hub and Intelligence Suite

In May, Alteryx debuted the Alteryx Analytic Process Automation (APA) Platform, an end-to-end data science, machine learning and analytics process automation system used by analysts and data scientists to prepare, blend, enrich and analyze data.

In June the company extended the capabilities of the Alteryx APA Platform with the Alteryx Analytics Hub and Alteryx Intelligence Suite.

Analytics Hub works with the Alteryx APA Platform to consolidate analytic assets into one system where they can be accessed by data workers and shared for collaborative tasks. Intelligence Suite, part of the Alteryx APA Platform 2020.2 update, works with Analytics Hub and Alteryx Designer to help users without a data science background build their own predictive models.

Aparavi Data Intelligence & Automation Platform

Expanding beyond its file backup and data protection roots, Aparavi in March debuted the Aparavi Data Intelligence & Automation Platform that’s used to find, classify, automate and govern distributed data across on-premises and cloud systems.

The cloud-based platform is used for a range of big data management tasks including data discovery, data retention and access, data storage and protection, and data governance, risk and compliance management. The system provides business analytics, machine learning and collaboration tools with access to distributed data, helping users transform it into a competitive asset.

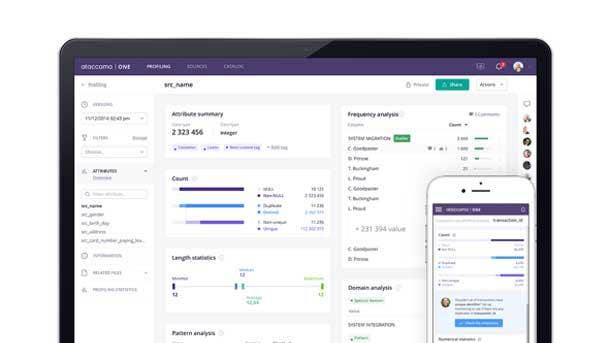

Ataccama One

In November, Toronto-based Ataccama unveiled the second generation of its Ataccama One platform that consolidates data management and data governance functions into a single system with the aim of maintaining data integrity throughout an organization.

Ataccama One performs a raft of functions for which businesses and organizations generally rely on separate tools – if not manual processes – including data quality, master data management, data catalog, data governance, data integration and other data management tasks.

The second generation of the software, slated to be available in February 2021, integrates the software’s functions into a unified fabric that is stitched together using metadata. The new release leverages AI and machine learning to automate more data management tasks. And it offers new capabilities for processing data across complex ecosystems of on-premises, multi-cloud and hybrid IT environments.

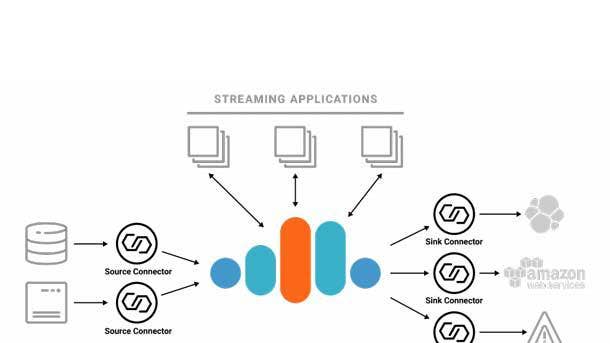

Confluent Cloud

Confluent is a pioneer in event streaming software, marketing a commercial product based on the ground-breaking Kafka stream-processing software that was originally developed by Confluent’s founders. Confluent’s software is fast becoming a key technology within many organizations’ real-time data processing systems.

Under the name Project Metamorphosis, Confluent developed Confluent Cloud, a fully managed Kafka service that runs on AWS, Microsoft Azure and Google Cloud Platform and is available through all three major cloud marketplaces.

The Confluent Cloud service makes it easier for businesses and organizations to quickly build event-streaming applications without the need to build and maintain custom point-to-point streaming data connections or manage related infrastructure.

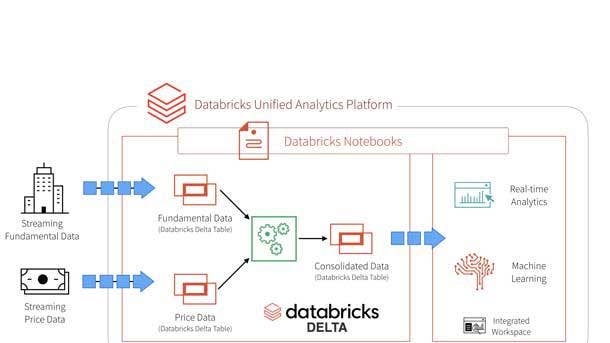

Databricks Delta Engine and SQL Analytics

Fast-growing Databricks, developer of the Databricks Unified Data Analytics Platform, expanded its technology portfolio in June with the Databricks Delta Engine, a high-performance query engine for cloud-based data lakes. The new software is expected to help businesses get more insights and value out of data lakes – huge stores of unorganized data.

Databricks developed Delta Lake, a data storage technology layer that runs on data lakes, in 2017 and donated the technology to the Linux Foundation as an open-source project. The new Delta Engine, a commercial product, works in conjunction with Delta Lake to enable fast query execution for data analytics and data science without having to move data out of a data lake.

In November Databricks took its data lake offerings to the next level and launched SQL Analytics, software for running SQL analytic queries directly on data lakes – a significant move given that data lakes typically contain huge volumes of unorganized, often unstructured data. SQL Analytics essentially turns data lakes into data warehouses – what Databricks calls “data lakehouses.”

Logi Analytics Logi Composer

Data analysis is traditionally carried out apart from business processes. Business analysts and information workers use data analytics tools to generate the information and insights they need, then apply that to their work. But what if the analytical capabilities were built right into the operational applications that power those business processes?

That’s the idea behind Logi Analytics’ Logi Composer development platform, which provides a way for ISVs and corporate developers to build self-service business analysis capabilities directly into commercial and in-house applications and workflows.

Logi Composer, which debuted in June, is used to design, build and embed interactive dashboards and data visualizations into applications and develop connections to popular data sources that underlie the applications. The tool’s back-end query processing is powered by the Smart Data Connectors technology Logi Analytics acquired in 2019 when it bought Zoomdata.

MariaDB SkySQL

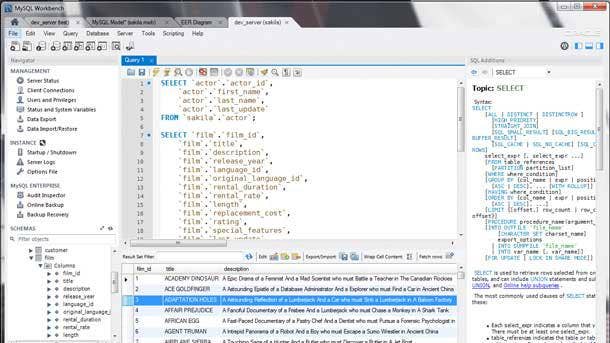

MariaDB develops a popular open-source SQL database based on the MySQL database platform. In 2020 the company launched MariaDB SkySQL, a fully managed cloud Database-as-a-Service edition of MariaDB that can perform both transactional processing and data analytics in a single database.

SkySQL incorporates the entire MariaDB platform, including MariaDB Enterprise Server, MariaDB ColumnStore and MariaDB MaxScale. The cloud-native system uses Kubernetes for container orchestration; the ServiceNow workflow engine for inventory, configuration and workflow management; Prometheus for real-time monitoring and alerting; and the Grafana open-source analytics and visualization application for data visualization.

Later in 2020 MariaDB added to SkySQL the ability to customize database options and configurations to meet enterprise-class security, high-availability and disaster recovery requirements.

Oracle MySQL Database Service

Oracle acquired the popular MySQL database in 2010 when it bought Sun Microsystems, which had acquired MySQL in 2008.

In December Oracle announced the general availability of MySQL Database Service, a fully managed cloud-based database service that the software giant is pitching as a high-performance, lower-cost alternative to other cloud databases such as Amazon RDS, Google Cloud SQL and Microsoft Azure SQL Database.

While the availability of MySQL as a cloud service is itself significant, Oracle also added the MySQL Analytics Engine to the database. MySQL has traditionally been used for running transaction processing applications, but the addition of the new analytics engine creates a unified database platform for running both transactional (OLTP) and analytical (OLAP) workloads.

Splunk Observability Suite

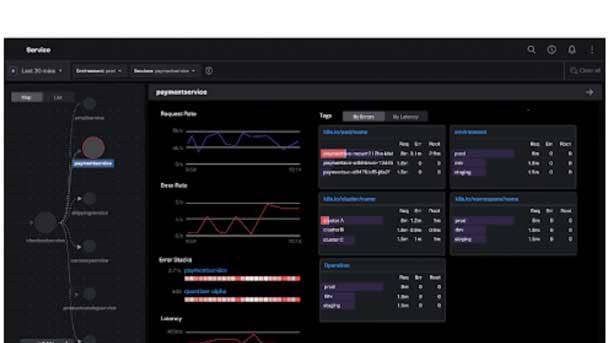

IT system monitoring and management has been one of the biggest markets for Splunk’s machine data management platform. In October, at its virtual .conf20 event, the company expanded its offerings in that arena with the debut of the Splunk Observability Suite, a comprehensive line of software for IT and DevOps teams for monitoring, investigating and troubleshooting applications.

Splunk is positioning the suite as a critical set of tools for businesses moving IT workloads to the cloud and undertaking digital transformation initiatives.

The Observability Suite incorporates a number of existing Splunk products for infrastructure monitoring, application performance monitoring, digital experience monitoring, log investigation and incident response into a tightly integrated whole.

The suite also includes technology from two recent acquisitions: Plumbr, an Estonia-based developer of application performance monitoring tools; and Rigor, an Atlanta developer of digital experience monitoring software.