AMD Chips Away At Intel In World’s Top 500 Supercomputers As GPU War Looms

AMD’s CPU share of the world’s top 500 supercomputers nearly doubles to 21 while Intel loses share. At the same time, both chipmakers are plotting new challenges to Nvidia’s data center GPUs that are becoming more prevalent in high-performance computing systems.

AMD’s influence in the world’s top 500 supercomputers is continuing to grow, chipping away at Intel’s dominance, as both companies plot new challenges to Nvidia’s GPU throne.

This is according to an updated list of the world’s fastest supercomputers that was released Monday by Top500, the organization that compiles the list, during the virtual Supercomputing 2020 conference.


The Fugaku supercomputer in Japan, which runs on Arm-based Fujitsu CPUs, kept the No. 1 spot it claimed when the system debuted on the list over the summer, but only one new supercomputer running Arm-based processors was added to the list in the fall 2020 update.

AMD’s CPU share of the top supercomputers nearly doubled to 21 systems from 11 in the summer, a total that includes one system using Hygon Dhyana processors, which use AMD technology through a Chinese joint venture. The chipmaker’s growth over the last few months came from new systems with second-generation EPYC processors.

While 29 of the 44 new systems on the Top500 list use Intel processors, mostly from the second-generation Xeon Scalable lineup, the chipmaker lost share overall, falling from 470 systems to 459 over the last few months. The overall number of systems with second-generation Xeon Scalable CPUs also declined, from 72 to 54.
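Because the Top500 list always ranks 500 systems, these counts translate directly into percentage shares. As a quick worked example (a sketch of the arithmetic, not Top500's own methodology), the snippet below converts the CPU counts quoted in this article into percentages of the list; the vendor labels and the `share_percent` helper are illustrative names, not part of any Top500 data format.

```python
# CPU system counts quoted in this article for the summer and fall 2020 lists.
TOTAL_SYSTEMS = 500  # the Top500 list always ranks 500 systems

cpu_counts = {
    "AMD (incl. Hygon)": {"summer 2020": 11, "fall 2020": 21},
    "Intel": {"summer 2020": 470, "fall 2020": 459},
}


def share_percent(count: int, total: int = TOTAL_SYSTEMS) -> float:
    """Return a system count as a percentage of the whole list."""
    return 100.0 * count / total


for vendor, counts in cpu_counts.items():
    before = share_percent(counts["summer 2020"])
    after = share_percent(counts["fall 2020"])
    # The change is reported in percentage points, not percent.
    print(f"{vendor}: {before:.1f}% -> {after:.1f}% ({after - before:+.1f} points)")
```

Run as written, it prints `AMD (incl. Hygon): 2.2% -> 4.2% (+2.0 points)` and `Intel: 94.0% -> 91.8% (-2.2 points)`, showing that AMD's gain of ten systems roughly matches Intel's loss of eleven.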

As for accelerators and co-processors, Nvidia continued to dominate, with its GPUs now inside 141 of the world’s top supercomputers, up from 135 in the summer. While systems with the company’s previous-generation V100 GPUs drove most of that growth, the number of systems with the chipmaker’s new A100 GPUs grew to two, with Nvidia’s A100-based Selene supercomputer climbing from No. 7 to No. 5 thanks to the addition of new systems to the cluster.

AMD showed no growth on the GPU side, with only one system using its GPUs. Three systems using Intel’s deprecated Xeon Phi accelerators remained on the list.

However, Nvidia is set to face greater competition, particularly in high-performance computing.

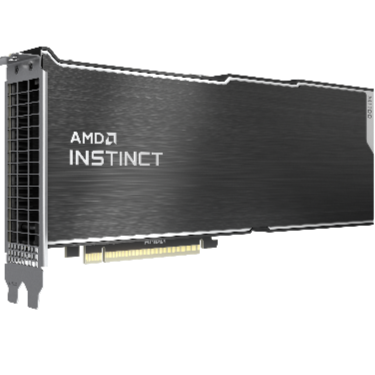

On Monday morning, AMD revealed its new Instinct MI100 server GPU, calling it the “world’s fastest HPC accelerator for scientific research,” thanks to its ability to surpass the 10-teraflop barrier for double-precision floating-point performance.

The new GPU comes with 32GB of HBM2 high-bandwidth memory and, thanks to its new Matrix Core technology, improves half-precision floating-point performance for AI training workloads by nearly seven times over the company’s previous generation of accelerators.

That Matrix Core technology is part of AMD’s new CDNA architecture that is designed for HPC and machine learning workloads. Future iterations of the architecture will be used for next-generation Instinct GPUs that will go into two of the U.S. Department of Energy’s first exascale supercomputers, Frontier and El Capitan, which are set to launch in 2021 and 2023, respectively.

“It’s AMD’s flag plant marking the path to exascale,” Brad McCredie, AMD’s corporate vice president of data center GPU and accelerated processing, told CRN.

Along with the launch of MI100, AMD announced that its ROCm developer software now has an open-source compiler that supports OpenMP 5.0 and HIP. The software can now also deliver better performance for applications running on the PyTorch and TensorFlow frameworks.

Intel, on the other hand, plans to release an HPC-focused GPU, code-named Ponte Vecchio, in late 2021 or early 2022 as part of a broader rollout of discrete GPUs for different markets. That GPU is set to be used by the U.S. Department of Energy’s other exascale supercomputer, Aurora.

As part of Intel’s move to a heterogeneous compute portfolio that includes GPUs, the company last week announced its first discrete GPU for servers, the Intel Server GPU. Based on the same Xe low-power microarchitecture that powers Intel graphics in laptops, it is meant for Android cloud gaming and high-density media transcode workloads.

Alongside the new GPU, Intel said the gold version of its oneAPI toolkits will launch in December, which will make it easier for developers to optimize software for the company’s broader portfolio of CPUs, GPUs, FPGAs and other kinds of accelerators.

“Today is a key moment in our ambitious oneAPI and XPU journey. With the gold release of our oneAPI toolkits, we have extended the developer experience from familiar CPU programming libraries and tools to include our vector-matrix-spatial architectures,” said Raja Koduri, who is senior vice president, chief architect and general manager of Intel’s Architecture, Graphics and Software group.

But Nvidia isn’t slowing down by any means. The company made its own announcement Monday, revealing the new A100 80GB GPU, which doubles the high-bandwidth memory capacity of the original A100 SXM GPU that launched earlier this year.

“When you combine the world’s fastest GPU with the world’s highest memory bandwidth and all the optimizations in our software platform, the results are dramatic performance and efficiency gains,” said Paresh Kharya, senior director of product management for accelerated computing at Nvidia.

Alexey Stolyar, CTO of International Computer Concepts, a Northbrook, Ill.-based system builder, said his HPC customers care about the number of “flops,” or floating-point operations per second, that GPUs can provide, because CPUs alone cannot deliver that level of performance.

For instance, he said, he is working with a customer that needs to reach 400 teraflops.

“That number is kind of hard to reach with CPUs, you really need that GPU horsepower to get there,” Stolyar said.
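Stolyar's 400-teraflop example can be turned into a back-of-the-envelope device count. The sketch below assumes ideal linear scaling and peak rather than sustained throughput, which real systems do not fully reach. The 10-teraflop per-GPU figure is the double-precision threshold AMD cites for the MI100 (the card exceeds it, so the GPU result is an upper bound), while the 2-teraflop per-CPU figure is a hypothetical round number chosen for illustration, not a spec from this article. `devices_needed` is an illustrative helper name.

```python
import math


def devices_needed(target_tflops: float, per_device_tflops: float) -> int:
    """Smallest whole number of devices whose combined peak meets the target.

    Assumes perfect linear scaling and peak (not sustained) throughput.
    """
    if per_device_tflops <= 0:
        raise ValueError("per_device_tflops must be positive")
    return math.ceil(target_tflops / per_device_tflops)


TARGET_TFLOPS = 400  # customer target quoted by Stolyar

# ~10 TFLOPS FP64 per GPU: the MI100 threshold cited above, used as a round figure.
print("GPUs:", devices_needed(TARGET_TFLOPS, 10))  # GPUs: 40

# Hypothetical 2 TFLOPS FP64 per CPU socket (assumed, not from the article).
print("CPU sockets:", devices_needed(TARGET_TFLOPS, 2))  # CPU sockets: 200
```

Under these assumed figures, a CPU-only build needs five times as many sockets as the GPU build, which illustrates the gap Stolyar describes.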

While Nvidia has dominated customer conversations and deployments to date for GPU-accelerated servers, Stolyar said he sees some pockets of interest for AMD’s offerings.

But for greater adoption of new GPUs from Intel and AMD to happen, he added, both companies need to ensure their respective tool sets can compete with Nvidia’s CUDA platform.

“If performance is great and the numbers are great, it’s going to be interesting to see how they do the tool sets,” he said.